Running large-scale applications can be a challenge, especially when it comes to ensuring optimal server performance. As your user base grows and your application becomes more complex, the demands on your servers increase significantly. If you’re not careful, you could experience slow loading times, frequent crashes, and even downtime, leading to a frustrated user base and lost revenue. This article will explore key strategies for optimizing server performance to handle the demands of large-scale applications. We’ll discuss everything from hardware optimization to software configurations and best practices for ensuring a smooth and efficient user experience.

Understanding the Demands of Large-Scale Applications

In the rapidly evolving landscape of software development, large-scale applications have become ubiquitous, transforming the way we live, work, and interact with the world. From social media platforms to e-commerce giants, these applications handle massive amounts of data, users, and transactions, placing significant demands on underlying infrastructure and software architecture. Understanding these demands is crucial for building scalable, reliable, and performant applications that can meet the expectations of users and businesses alike.

Scalability: Handling Growth and Demand

Scalability is the ability of an application to handle increasing load without compromising performance. As the user base and data volumes grow, applications need to adapt and scale seamlessly. This involves several key aspects:

- Horizontal Scaling: Adding more servers or instances to distribute the workload across multiple machines.

- Vertical Scaling: Upgrading the hardware resources of existing servers, such as CPU, memory, and storage.

- Load Balancing: Distributing incoming traffic evenly across multiple servers to prevent any single server from becoming overwhelmed.

- Caching: Storing frequently accessed data in memory or on a faster tier of storage to reduce latency and improve response times.

Reliability: Ensuring Availability and Resilience

Reliability is paramount for large-scale applications, as downtime can have significant financial and reputational consequences. Key considerations include:

- High Availability: Designing the application to remain operational even in the event of failures, such as server outages or network disruptions.

- Fault Tolerance: Building in redundancy and mechanisms that detect and recover from errors, so that no single point of failure can take the whole system down.

- Data Replication: Maintaining multiple copies of data across different locations so that a single hardware or site failure does not cause data loss or prolonged unavailability.

- Monitoring and Alerting: Continuously monitoring the application’s health and performance, and setting up alerts to notify developers of potential issues.
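One common fault-tolerance mechanism is retrying transient failures (a dropped connection, a timeout) with exponential backoff. The sketch below is a minimal illustration, not a prescribed implementation: `retry_with_backoff` and `flaky_call` are hypothetical names, and the default attempt count, delays, and the choice of which exceptions count as transient are assumptions you would tune for your own services.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")

def retry_with_backoff(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.1,
    max_delay: float = 5.0,
    retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `operation`, retrying transient failures with exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except retry_on:
            if attempt == max_attempts:
                raise  # Out of attempts: surface the last error to the caller.
            # The delay cap doubles each time (0.1 s, 0.2 s, 0.4 s, ...) up to max_delay;
            # "full jitter" picks a random delay below the cap so that many clients
            # recovering from the same outage do not retry in lockstep.
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, cap))
    raise AssertionError("unreachable")

attempts = []

def flaky_call() -> str:
    """Hypothetical dependency that fails twice, then succeeds."""
    attempts.append(1)
    if len(attempts) < 3:
        raise ConnectionError("transient failure")
    return "ok"

print(retry_with_backoff(flaky_call))  # ok (after two retries)
```

Only errors listed in `retry_on` are retried; retrying every exception would also repeat requests that can never succeed, such as invalid input.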

Performance: Delivering Fast and Responsive Experiences

Performance is essential for delivering a positive user experience. Large-scale applications need to respond quickly, even under high loads. Key factors influencing performance include:

- Database Optimization: Choosing the right database technology and optimizing database queries to minimize latency.

- Code Optimization: Writing efficient code and avoiding bottlenecks in the application logic.

- Content Delivery Networks (CDNs): Serving content from servers spread across many geographic locations, so that users worldwide are served from a nearby server with lower latency.

- Asynchronous Processing: Using background tasks to handle long-running operations and prevent blocking the main application thread.
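A minimal sketch of asynchronous processing in Python: the request handler hands slow work to a background thread pool and returns a job ID immediately instead of blocking. The names `generate_report`, `handle_request`, and `job_status` are hypothetical, and the pool size is an assumption. A production system would more likely use a durable message queue and separate worker processes so that queued jobs survive restarts.

```python
import time
import uuid
from concurrent.futures import Future, ThreadPoolExecutor

# A small pool of background workers; the size is an illustrative assumption.
executor = ThreadPoolExecutor(max_workers=4)
jobs: dict[str, Future] = {}

def generate_report(user_id: int) -> str:
    """Hypothetical long-running task (stands in for report generation, emails, etc.)."""
    time.sleep(0.5)
    return f"report for user {user_id}"

def handle_request(user_id: int) -> str:
    """Enqueue the slow work and return at once with a job ID the client can poll."""
    job_id = str(uuid.uuid4())
    jobs[job_id] = executor.submit(generate_report, user_id)
    return job_id

def job_status(job_id: str) -> str:
    """Return the finished result, or "pending" while the worker is still busy."""
    future = jobs[job_id]
    return future.result() if future.done() else "pending"
```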

Security: Protecting Data and Users

Security is a critical concern for all applications, but it becomes even more important for large-scale systems that handle sensitive user data. Key considerations include:

- Authentication and Authorization: Implementing secure methods for verifying user identities and granting access to resources.

- Data Encryption: Encrypting sensitive data at rest and in transit to prevent unauthorized access.

- Vulnerability Scanning and Penetration Testing: Regularly scanning for vulnerabilities and conducting penetration tests to identify weaknesses.

- Security Monitoring and Incident Response: Monitoring for suspicious activity and implementing robust incident response plans.
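As one concrete example of secure authentication, passwords should be stored as salted, deliberately slow hashes rather than in plain text. The sketch below uses PBKDF2 from Python's standard library; the iteration count is an illustrative assumption to check against current guidance, and dedicated password-hashing algorithms such as Argon2, scrypt, or bcrypt are common alternatives.

```python
import hashlib
import hmac
import os

# Illustrative iteration count: higher values slow down attackers (and your logins).
ITERATIONS = 600_000

def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, derived_key) for storage; never store the plain password."""
    salt = os.urandom(16)  # a unique random salt per user defeats precomputed tables
    key = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, key

def verify_password(password: str, salt: bytes, stored_key: bytes) -> bool:
    """Re-derive the key from the attempt and compare it with the stored one."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    # Constant-time comparison avoids leaking how many leading bytes matched.
    return hmac.compare_digest(candidate, stored_key)
```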

Conclusion

Building and maintaining large-scale applications presents unique challenges, but understanding the demands of scalability, reliability, performance, and security is essential for success. By addressing these factors carefully, developers can create applications that are robust, efficient, and capable of handling the demands of a rapidly evolving digital world.

Key Performance Metrics to Monitor

In today’s data-driven world, it’s more important than ever for businesses to track and analyze key performance indicators (KPIs) to measure success and make informed decisions. KPIs are quantifiable metrics that track progress toward a specific business objective. By monitoring key metrics, businesses can gain valuable insights into their operations, identify areas for improvement, and ultimately drive growth.

There are many different KPIs that businesses can track, but some of the most important ones include:

Financial Metrics

- Revenue: The total income a business generates from its normal operations, such as sales of goods and services, before any expenses are deducted.

- Profit: The difference between a business’s revenue and expenses.

- Return on Investment (ROI): A measure of how much profit a business generates for every dollar invested.

- Customer Acquisition Cost (CAC): The average cost of acquiring a new customer.

- Customer Lifetime Value (CLTV): The total amount of revenue a business expects to generate from a single customer over the course of their relationship.
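The financial ratios above are simple arithmetic. The sketch below shows one way to compute them; the function names and sample figures are hypothetical, and the CLTV function uses a deliberately simplified model (average revenue per period multiplied by the expected number of periods). Many businesses also factor in margins and discounting.

```python
def roi(gain: float, cost: float) -> float:
    """Return on investment as a fraction: (gain - cost) / cost."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (gain - cost) / cost

def cac(acquisition_spend: float, new_customers: int) -> float:
    """Average cost of acquiring one customer over a period."""
    return acquisition_spend / new_customers

def cltv(avg_revenue_per_period: float, expected_periods: float) -> float:
    """Simplified lifetime value: revenue per period times expected number of periods."""
    return avg_revenue_per_period * expected_periods

print(roi(15_000, 10_000))  # 0.5, i.e. a 50% return
print(cac(20_000, 400))     # 50.0 per customer
print(cltv(30, 24))         # 720 (e.g. $30/month over 24 months)
```

Comparing the last two (here 720 / 50) shows how many times over a typical customer repays the cost of acquiring them.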

Marketing Metrics

- Website Traffic: The number of visitors to a business’s website.

- Conversion Rate: The percentage of website visitors who complete a desired action, such as making a purchase or signing up for a newsletter.

- Social Media Engagement: The number of likes, comments, and shares a business receives on its social media posts.

- Email Open Rate: The percentage of recipients who open an email sent by a business.

- Click-Through Rate (CTR): The percentage of people who click a link out of all those who saw it, such as email recipients or viewers of an ad or web page.

Sales Metrics

- Sales Revenue: The total amount of money a business earns from its sales.

- Average Order Value (AOV): The average amount of money spent by a customer per order.

- Number of Sales: The total number of sales made by a business.

- Sales Cycle Length: The average amount of time it takes to close a sale.

- Customer Churn Rate: The percentage of customers who stop doing business with a company over a given period.

Operational Metrics

- Employee Productivity: A measure of how efficiently employees are working.

- Inventory Turnover: A measure of how quickly inventory is sold.

- Lead Time: The amount of time it takes to fulfill an order.

- Customer Service Response Time: The average amount of time it takes to respond to a customer inquiry.

- On-Time Delivery Rate: The percentage of orders that are delivered on time.

By tracking these and other key metrics, businesses can gain valuable insights into their performance and make data-driven decisions to improve their operations and achieve their goals. It’s important to note that the specific KPIs that are most important to monitor will vary depending on the business’s industry, size, and goals. However, by tracking the right metrics, businesses can gain a competitive advantage and drive sustainable growth.

Hardware Optimization Techniques

Hardware optimization refers to the process of improving the performance of a computer system by making changes to its physical components. This can involve upgrading components, adjusting settings, or even making changes to the system’s physical layout. By optimizing your hardware, you can make your computer run faster, more efficiently, and with fewer errors.

Common Hardware Optimization Techniques

There are many different techniques that can be used to optimize hardware. Some of the most common include:

- Upgrading your RAM: RAM is the working memory that holds the programs and data your computer is actively using. Adding RAM can significantly improve performance when the system is running short of memory and falling back on much slower disk-based swap, which is common when multitasking or running demanding applications.

- Upgrading your CPU: The CPU is the brain of your computer. A faster CPU will allow your computer to process information more quickly. This is especially important for gaming, video editing, and other tasks that require significant processing power.

- Upgrading your storage: Your hard drive or SSD stores all of your data. Upgrading to a faster storage drive, such as an SSD, can dramatically improve your computer’s boot times and overall performance.

- Improving your cooling system: A well-cooled computer will run more efficiently and avoid overheating issues. This can involve adding more fans, upgrading your CPU cooler, or even using a liquid cooling system.

- Overclocking: Overclocking involves pushing your hardware components beyond their default clock speeds. This can result in significant performance gains, but it can also lead to instability or damage if not done correctly.

Benefits of Hardware Optimization

There are many benefits to optimizing your hardware, including:

- Improved performance: Hardware optimization can make your computer run faster and more efficiently. This can be especially beneficial for demanding tasks such as gaming, video editing, and software development.

- Increased lifespan: Keeping your hardware cool and well-maintained can help to extend its lifespan. This can save you money in the long run by preventing premature failures.

- Reduced energy consumption: Optimizing your hardware can also help to reduce your energy consumption. This is not only good for the environment, but it can also save you money on your energy bills.

- Enhanced stability: A well-optimized system is more likely to be stable and reliable. This can prevent crashes, freezes, and other issues that can disrupt your workflow.

Tips for Hardware Optimization

Here are some tips for optimizing your hardware:

- Monitor your system’s performance: Use tools like Task Manager on Windows, or top and htop on Linux, to monitor your CPU, RAM, and storage usage. This will help you identify bottlenecks and areas where your system is struggling.

- Clean your computer regularly: Dust and debris can build up inside your computer, leading to overheating and performance issues. Make sure to clean your computer regularly, especially if you live in a dusty environment.

- Update your drivers: Outdated drivers can cause performance issues and instability. Make sure to keep your drivers up to date by visiting the manufacturer’s website.

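- Use a defragmentation tool (HDDs only): Defragmentation can improve the performance of a traditional hard disk drive (HDD), especially one that is nearly full and heavily fragmented. Do not defragment SSDs: they gain no speed from it, and the extra writes add wear; modern operating systems optimize SSDs with TRIM instead.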

- Consider upgrading your hardware: If your computer is several years old, it may be time to consider upgrading your components to improve performance.
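For servers without a graphical tool, a few basic health numbers can also be collected in code. The sketch below uses only Python's standard library; `resource_snapshot` is a hypothetical helper, and treating a one-minute load average above the core count as "saturated" is a rough rule of thumb, not a formal threshold. The standard library has no portable memory API, so the third-party psutil package is the usual choice when you also need RAM figures.

```python
import os
import shutil

def resource_snapshot(path: str = "/") -> dict:
    """Collect a few basic health numbers using only the standard library."""
    usage = shutil.disk_usage(path)
    cores = os.cpu_count() or 1  # cpu_count() may return None if undetermined
    snapshot = {
        "cpu_count": cores,
        "disk_used_pct": round(100 * usage.used / usage.total, 1),
    }
    try:
        # getloadavg() is Unix-only: how many processes were running or waiting
        # to run, averaged over the last 1, 5, and 15 minutes.
        load_1m, _load_5m, _load_15m = os.getloadavg()
    except (AttributeError, OSError):
        return snapshot  # e.g. on Windows, where the function does not exist
    snapshot["load_avg_1m"] = load_1m
    # Rough saturation signal: sustained load above the core count means work is queuing.
    snapshot["cpu_saturated"] = load_1m > cores
    return snapshot

print(resource_snapshot())
```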

Hardware optimization is an essential process for keeping your computer running smoothly and efficiently. By following the tips above, you can ensure that your system is performing at its best.

Software Optimization Strategies

Software optimization is the process of improving the performance of a software application. It can involve a variety of techniques, such as reducing code complexity, improving algorithms, and optimizing data structures. By optimizing your software, you can improve its speed, efficiency, and overall user experience. This blog post will discuss several strategies for optimizing software and enhancing performance.

1. Code Optimization

One of the most important aspects of software optimization is code optimization. This involves writing code that is efficient and performs well. Here are a few tips for code optimization:

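- Use efficient data structures: Choosing the right data structure can significantly impact your program’s performance. For example, an array gives constant-time access by index and better cache locality than a linked list, while a linked list makes insertions and deletions in the middle cheaper once you hold a reference to the node.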

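- Reduce function-call overhead in hot paths: Function calls carry some overhead, which can add up inside tight loops, particularly in interpreted languages. Profile before restructuring code for this reason, though: modern compilers often inline small functions, and readability usually matters more than removing a few calls.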

- Use appropriate algorithms: Some algorithms are more efficient than others. For example, a binary search algorithm is more efficient than a linear search algorithm for searching a sorted array.

- Avoid unnecessary computations: If you’re performing the same computation multiple times, you can often save time by caching the result.
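Two of the tips above can be illustrated in a few lines of Python: binary search on a sorted list (via the standard `bisect` module) versus a linear scan, and caching repeated computations with `functools.lru_cache`. The function names are illustrative, and the memoized Fibonacci function is just a stock example of a computation with many repeated subproblems.

```python
from bisect import bisect_left
from functools import lru_cache

def linear_search(items: list[int], target: int) -> int:
    """O(n): check each element in turn until a match is found."""
    for i, value in enumerate(items):
        if value == target:
            return i
    return -1

def binary_search(sorted_items: list[int], target: int) -> int:
    """O(log n): repeatedly halve the search range; the input must be sorted."""
    i = bisect_left(sorted_items, target)  # leftmost position where target could go
    if i < len(sorted_items) and sorted_items[i] == target:
        return i
    return -1

@lru_cache(maxsize=None)
def fib(n: int) -> int:
    """Memoized Fibonacci: each fib(k) is computed once, then served from the cache."""
    return n if n < 2 else fib(n - 1) + fib(n - 2)

data = list(range(0, 1_000_000, 2))  # 500,000 sorted even numbers
print(binary_search(data, 999_998))  # 499999, after about 19 comparisons
print(fib(30))                       # 832040; without the cache this takes ~1.6M calls
```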

2. Database Optimization

If your software uses a database, it’s important to optimize the database for performance. Here are a few tips for database optimization:

- Use indexes: Indexes can speed up queries by allowing the database to quickly locate the data you need.

- Optimize queries: Make sure your queries are as efficient as possible. This can involve choosing appropriate joins, selecting only the columns you need instead of using SELECT *, and using appropriate data types.

- Cache data: Caching data can reduce the number of queries that need to be made to the database.

- Use stored procedures: Stored procedures can improve performance by running multi-step logic inside the database, which reduces the number of round trips and the amount of data transferred between the application and the database.
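The effect of an index can be seen directly in a database's query plan. The sketch below uses Python's built-in `sqlite3` module with an in-memory database; the `users` table and `idx_users_email` index are hypothetical. Other databases expose the same information through their own `EXPLAIN` commands, and the exact plan wording varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT, name TEXT)")
conn.executemany(
    "INSERT INTO users (email, name) VALUES (?, ?)",
    [(f"user{i}@example.com", f"User {i}") for i in range(10_000)],
)

def plan(sql: str, params: tuple) -> str:
    """Return SQLite's query-plan description for a statement."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)  # the last column holds the readable detail

# Select only the columns we need rather than SELECT *.
query = "SELECT id, name FROM users WHERE email = ?"
print(plan(query, ("user42@example.com",)))  # expect a full-table SCAN

conn.execute("CREATE INDEX idx_users_email ON users (email)")
print(plan(query, ("user42@example.com",)))  # expect a SEARCH using idx_users_email
```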

3. Network Optimization

If your software communicates with other systems over the network, it’s important to optimize network performance. Here are a few tips for network optimization:

- Reduce the amount of data transmitted: Larger payloads take longer to send, especially over slow or congested links. Reduce the amount of data transmitted by using compression and by sending only the data the client actually needs.

- Use a content delivery network (CDN): CDNs can help to speed up the delivery of static content, such as images and JavaScript files.

- Use a load balancer: Load balancers can distribute traffic across multiple servers, which can help to improve performance and scalability.
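As an illustration of reducing payload size, the sketch below serializes JSON without extra whitespace and compresses it with gzip using Python's standard library. The sample records are hypothetical. Over HTTP, clients and servers normally negotiate this through the Accept-Encoding and Content-Encoding: gzip headers rather than by hand.

```python
import gzip
import json

def encode_payload(data: object) -> bytes:
    """Serialize compactly and gzip-compress a JSON payload before sending it."""
    raw = json.dumps(data, separators=(",", ":")).encode("utf-8")  # no extra whitespace
    return gzip.compress(raw)

def decode_payload(blob: bytes) -> object:
    """Reverse encode_payload on the receiving side."""
    return json.loads(gzip.decompress(blob).decode("utf-8"))

records = [{"id": i, "status": "active", "region": "eu-west"} for i in range(1_000)]
raw_size = len(json.dumps(records, separators=(",", ":")).encode("utf-8"))
blob = encode_payload(records)
print(raw_size, len(blob))  # repetitive JSON like this typically shrinks several-fold
```

Compression costs CPU time on both ends, so it pays off most for larger, repetitive text payloads and little for already-compressed formats such as JPEG images.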

4. User Interface (UI) Optimization

The user interface (UI) of your software can also have a significant impact on performance. Here are a few tips for UI optimization:

- Reduce the number of UI elements: The more UI elements you have, the more resources your application will need to render them.

- Use lazy loading: Lazy loading can help to improve performance by only loading UI elements when they are needed.

- Use animation sparingly: Animations can be visually appealing, but they can also impact performance. Use animations sparingly and make sure they are optimized for performance.

5. Profiling and Monitoring

Profiling and monitoring your software can help you identify bottlenecks and other areas where performance can be improved. There are a variety of profiling and monitoring tools available, and the best tool for you will depend on the specific needs of your application. Profiling tools help identify performance bottlenecks, and monitoring tools track performance metrics, such as response times, resource utilization, and error rates.
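Python's built-in `cProfile` and `pstats` modules are one concrete example of a profiling tool. In the minimal sketch below, `slow_sum_of_squares` stands in for a hypothetical hot spot and `profile_call` is an illustrative helper; the report lists each function with its call count and time, sorted so the most expensive call chains come first.

```python
import cProfile
import io
import pstats

def slow_sum_of_squares(n: int) -> int:
    """Hypothetical hot spot used only to demonstrate profiling."""
    return sum(i * i for i in range(n))

def profile_call(func, *args, top: int = 5):
    """Run func(*args) under cProfile and return (result, text report)."""
    profiler = cProfile.Profile()
    result = profiler.runcall(func, *args)
    stream = io.StringIO()
    # Sort by cumulative time so the most expensive call chains appear first.
    pstats.Stats(profiler, stream=stream).sort_stats("cumulative").print_stats(top)
    return result, stream.getvalue()

result, report = profile_call(slow_sum_of_squares, 200_000)
print(report)
```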

Conclusion

Software optimization is an ongoing process. By following the tips discussed in this blog post, you can improve the performance of your software and enhance the user experience. Remember to test your software thoroughly after making changes to ensure that you haven’t introduced any new bugs.

Database Performance Tuning

Database performance tuning is the process of optimizing a database system to improve its performance and efficiency. This can involve a variety of techniques, including:

- Indexing: Creating indexes on frequently accessed columns can significantly speed up queries.

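- Query optimization: Rewriting queries so the database can choose a more efficient execution plan, for example by filtering rows early and avoiding patterns that prevent index use, can improve performance.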

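- Data normalization: Organizing data into well-structured tables reduces redundancy and update anomalies. It does not always speed up reads, though: highly normalized schemas require more joins, so read-heavy systems sometimes denormalize selectively.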

- Caching: Storing frequently accessed data in memory can reduce the need to access the disk, improving performance.

- Hardware upgrades: Upgrading the hardware, such as adding more memory or faster processors, can improve performance.

The specific techniques used to tune a database will depend on the specific database system, the application using the database, and the performance requirements of the application.

Benefits of Database Performance Tuning

There are many benefits to database performance tuning, including:

- Improved application performance: Faster database queries lead to faster application response times.

- Increased scalability: A tuned database can handle more users and data requests.

- Reduced hardware costs: By optimizing the database, you can potentially reduce the need for expensive hardware upgrades.

- Improved user experience: Users will experience faster application response times and a more responsive system.

Tips for Database Performance Tuning

Here are some tips for tuning your database:

- Monitor your database performance: Use tools to track key performance metrics, such as query execution time, disk I/O, and memory usage.

- Analyze your database queries: Identify slow queries and look for ways to optimize them.

- Use a database profiler: A profiler can show where time is spent within individual queries and across your database workload, helping you pinpoint bottlenecks.

- Test changes carefully: Before making any significant changes to your database, test them in a development or staging environment.
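Identifying slow queries starts with measuring them. The sketch below wraps query execution with a timer and records anything above a threshold; `timed_query`, `slow_queries`, and the 50 ms threshold are hypothetical choices for illustration. Most production databases have a built-in equivalent, such as PostgreSQL's `log_min_duration_statement` setting or MySQL's slow query log.

```python
import sqlite3
import time
from typing import List, Tuple

SLOW_QUERY_THRESHOLD_S = 0.05  # illustrative; derive it from your own latency targets
slow_queries: List[Tuple[str, float]] = []

def timed_query(
    conn: sqlite3.Connection,
    sql: str,
    params: tuple = (),
    threshold_s: float = SLOW_QUERY_THRESHOLD_S,
) -> list:
    """Run a query and record it (with its duration) if it exceeds the threshold."""
    start = time.perf_counter()
    rows = conn.execute(sql, params).fetchall()
    elapsed = time.perf_counter() - start
    if elapsed >= threshold_s:
        slow_queries.append((sql, elapsed))
    return rows
```

Reviewing `slow_queries` periodically, for example with `EXPLAIN` on each entry, points you to the queries most worth optimizing.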

Database performance tuning is an ongoing process. As your application changes and your data grows, you will need to continue to monitor and tune your database to ensure optimal performance.

Network Optimization for High Throughput

In today’s digital age, network performance is paramount. As businesses and individuals rely increasingly on online services, the demand for high throughput networks has skyrocketed. Network optimization is the process of fine-tuning a network to improve its efficiency and performance, resulting in faster data transfer speeds, reduced latency, and enhanced reliability. This article will delve into key strategies for achieving high throughput in your network.

Understanding Throughput

Throughput refers to the amount of data actually transferred over a network connection per unit of time, as opposed to bandwidth, which is the connection’s maximum capacity. It’s typically measured in bits per second (bps), megabits per second (Mbps), or gigabits per second (Gbps). High throughput is essential for various applications, including:

- Streaming video and audio

- Downloading large files

- Online gaming

- Cloud computing

- Video conferencing
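A frequent source of confusion is that file sizes are quoted in bytes while throughput is quoted in bits. The sketch below shows the conversion; it assumes decimal units (1 MB = 1,000,000 bytes, 1 Mbps = 1,000,000 bits/s) and ignores protocol overhead, so real transfers take somewhat longer.

```python
def transfer_time_seconds(size_bytes: float, throughput_bps: float) -> float:
    """Ideal transfer time: convert bytes to bits, divide by sustained throughput."""
    return size_bytes * 8 / throughput_bps

def measured_throughput_mbps(bytes_transferred: int, seconds: float) -> float:
    """Observed throughput in megabits per second."""
    return bytes_transferred * 8 / seconds / 1_000_000

print(transfer_time_seconds(1_000_000_000, 100_000_000))  # 1 GB at 100 Mbps: 80.0 s
print(measured_throughput_mbps(50_000_000, 8))            # 50 MB in 8 s: 50.0 Mbps
```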

Optimization Strategies

Optimizing a network for high throughput involves a multifaceted approach. Here are some critical strategies:

1. Bandwidth Management

Bandwidth is the capacity of a network connection. Efficient bandwidth management is crucial to ensure that resources are allocated appropriately. This can involve:

- Prioritizing traffic: Assigning higher priority to essential applications like video conferencing or critical data transfer.

- Traffic shaping: Controlling the amount of data that can be transmitted during specific time periods to prevent congestion.

- Quality of Service (QoS): Implementing QoS policies to guarantee a minimum level of bandwidth for certain applications.
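Traffic shaping is commonly built on a token bucket: tokens accumulate at a fixed rate up to a maximum burst size, and each packet (or byte) spends tokens before it may be sent. The sketch below is a minimal illustration with hypothetical names; real shaping usually happens in network devices or the operating system (for example, Linux `tc`). The clock is injectable so the behavior can be tested deterministically.

```python
import time
from typing import Callable

class TokenBucket:
    """Token bucket: allows bursts up to `capacity`, sustained `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate          # tokens added per second (e.g. packets/s or bytes/s)
        self.capacity = capacity  # maximum burst size
        self.tokens = capacity    # start with a full bucket
        self.clock = clock
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        """Return True and consume tokens if `cost` tokens are available now."""
        now = self.clock()
        # Refill in proportion to the time elapsed, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # a shaper would queue/delay this unit; a policer would drop it
```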

2. Network Infrastructure Upgrade

Outdated network equipment can significantly hinder throughput. Upgrading to modern routers, switches, and cabling can provide significant performance gains. Consider these aspects:

- Router capabilities: Ensure your router supports the latest network protocols and has sufficient processing power.

- Switch capacity: Choose switches with enough ports and bandwidth to accommodate your network’s needs.

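- Cabling type: Use cabling rated for your target speeds; for example, Cat6a supports 10 Gbps Ethernet over runs of up to 100 meters. Shielded variants can help in environments with heavy electrical interference.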

3. Wireless Network Optimization

For wireless networks, optimizing signal strength and reducing interference is crucial. Here are some key strategies:

- Optimal router placement: Position your router in a central location, away from walls and obstacles.

- Avoid interference: Minimize interference from other wireless devices, microwaves, and cordless phones.

- Channel selection: Choose a less crowded Wi-Fi channel to reduce interference.

- Access point deployment: For large areas, consider deploying multiple access points to extend coverage and improve signal strength.

4. Network Monitoring and Analysis

Monitoring network performance is essential to identify potential bottlenecks and areas for improvement. Use network monitoring tools to track:

- Throughput metrics: Monitor real-time data transfer speeds.

- Latency: Measure the delay in data transmission.

- Packet loss: Identify instances where data packets are lost in transit.

- Network utilization: Track the amount of bandwidth being used.
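Utilization and packet loss are usually derived from counters that interfaces and tools expose (on Linux, for instance, per-interface byte counters appear in `/proc/net/dev`). The sketch below shows the arithmetic with hypothetical function names and sample numbers; it assumes the byte counter did not wrap around between the two readings.

```python
def utilization_pct(bytes_start: int, bytes_end: int,
                    interval_s: float, link_bps: float) -> float:
    """Share of link capacity used between two byte-counter readings."""
    bits = (bytes_end - bytes_start) * 8
    return 100 * bits / (interval_s * link_bps)

def packet_loss_pct(sent: int, received: int) -> float:
    """Percentage of packets sent that never arrived."""
    return 100 * (sent - received) / sent if sent else 0.0

print(utilization_pct(0, 750_000_000, 60, 1_000_000_000))  # 750 MB in 60 s on 1 Gbps: 10.0
print(packet_loss_pct(1_000, 990))                          # 1.0
```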

Conclusion

Achieving high network throughput is a continuous process that requires careful planning, monitoring, and optimization. By implementing these strategies, you can significantly improve your network’s performance, ensuring smooth and efficient operation for your critical applications.

Caching Strategies for Improved Response Times

In the fast-paced world of web development, delivering content quickly is paramount. Users expect websites to load almost instantly, and slow response times can lead to frustration, higher bounce rates, and ultimately, lost revenue. Caching is a powerful technique that plays a crucial role in optimizing website performance and delivering a seamless user experience. This article will delve into various caching strategies and their benefits.

What is Caching?

Caching is a mechanism that stores copies of frequently accessed data in a temporary storage location, known as a cache. When a user requests a resource, the server first checks the cache. If the data is available in the cache, it is served directly, bypassing the need to fetch it from the original source. This significantly reduces latency and improves response times.
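The check-the-cache-first flow described above is often called the cache-aside pattern. Below is a minimal in-memory sketch with a time-to-live (TTL) so stale entries are eventually refreshed; `TTLCache` and `get_or_load` are hypothetical names, and the cache is unbounded. Real deployments typically use a dedicated store such as Redis or Memcached and limit memory with an eviction policy.

```python
import time
from typing import Any, Callable, Dict, Tuple

class TTLCache:
    """Minimal in-memory cache whose entries expire `ttl` seconds after being stored."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl
        self.clock = clock
        self._store: Dict[Any, Tuple[float, Any]] = {}  # key -> (expires_at, value)

    def get_or_load(self, key: Any, loader: Callable[[], Any]) -> Any:
        """Serve a fresh hit from the cache; otherwise call `loader` and cache the result."""
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]                         # cache hit
        value = loader()                            # miss or expired: fetch from origin
        self._store[key] = (now + self.ttl, value)
        return value
```

The TTL is the main design knob: longer values cut origin load further but let users see outdated data for longer.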

Types of Caching

There are several types of caching techniques, each with its own advantages and use cases:

1. Browser Caching

Browser caching stores static resources like images, CSS, and JavaScript files in the user’s browser. When a user revisits the same page, the browser retrieves these files from the cache instead of re-downloading them, resulting in faster loading times. This is often configured using HTTP headers like Cache-Control and Expires.
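The sketch below builds the two headers mentioned above for a static asset. `static_asset_headers` is a hypothetical helper; how the headers are attached to a response depends on your web server or framework. When both headers are present, caches use max-age and ignore Expires.

```python
import time
from email.utils import formatdate
from typing import Dict, Optional

def static_asset_headers(max_age_s: int, now: Optional[float] = None) -> Dict[str, str]:
    """Build caching headers that let browsers reuse a static file for max_age_s seconds."""
    now = time.time() if now is None else now
    return {
        # Cache-Control (HTTP/1.1): lifetime relative to when the response was sent;
        # "public" also allows shared caches such as CDNs to store it.
        "Cache-Control": f"public, max-age={max_age_s}",
        # Expires (HTTP/1.0): an absolute expiry date in HTTP date format.
        "Expires": formatdate(now + max_age_s, usegmt=True),
    }

print(static_asset_headers(86_400))  # cache for one day
```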

2. Server-Side Caching

Server-side caching involves storing dynamically generated content on the server itself. This can be done using various techniques like:

- Object caching: Storing entire objects like database records or API responses in memory.

- Page caching: Caching complete HTML pages for faster retrieval. This is particularly beneficial for pages with minimal dynamic content.

- Fragment caching: Caching specific sections of a page, allowing for partial updates.

3. Content Delivery Network (CDN) Caching

CDNs are geographically distributed networks of servers that store copies of your website’s content closer to users. When a user requests content, the CDN server nearest to them serves it, minimizing latency and improving performance.

Benefits of Caching

Caching offers numerous benefits for websites, including:

- Improved Response Times: Reduces the time it takes to load content by serving it from the cache.

- Reduced Server Load: Fewer requests are sent to the origin server, leading to less processing overhead.

- Enhanced Scalability: By absorbing repeated requests, caching lets the same backend infrastructure serve far more traffic and helps maintain performance during peak hours.

- Lower Bandwidth Costs: By minimizing data transfer, caching reduces bandwidth consumption and associated costs.

Implementing Caching

Implementing caching can vary depending on the chosen strategy. Some common methods include:

- HTTP Headers: Configuring Cache-Control, Expires, and other headers to control caching behavior.

- Caching Plugins: Using plugins or libraries specifically designed for caching.

- Configuration Management Tools: Leveraging tools to manage cache settings across multiple environments.

Conclusion

Caching is an indispensable technique for optimizing website performance. By leveraging different caching strategies, developers can significantly improve response times, reduce server load, and enhance user experience. Choosing the appropriate caching strategy depends on the specific needs of your application and the resources available. It is crucial to understand the advantages and limitations of each strategy to effectively implement caching for a faster, more scalable website.

Load Balancing for Scalability

In the world of web applications and services, scalability is paramount. As your application gains popularity and users, it needs to be able to handle the increasing traffic without sacrificing performance. This is where load balancing comes into play. Load balancing is a technique that distributes incoming traffic across multiple servers, ensuring that no single server becomes overwhelmed. It acts as a traffic cop, directing each request to an appropriate server, optimizing resource utilization and improving overall performance.
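Round robin is one of the simplest distribution strategies: each new request goes to the next server in rotation, skipping servers that have failed a health check. The sketch below is a minimal illustration with hypothetical backend names; production load balancers such as NGINX or HAProxy also offer strategies like least connections and weighted distribution.

```python
from itertools import cycle
from typing import List

class RoundRobinBalancer:
    """Rotate requests across backends, skipping any currently marked unhealthy."""

    def __init__(self, backends: List[str]):
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._ring = cycle(self.backends)

    def mark_down(self, backend: str) -> None:
        self.healthy.discard(backend)  # e.g. after a failed health check

    def mark_up(self, backend: str) -> None:
        self.healthy.add(backend)      # e.g. once health checks pass again

    def next_backend(self) -> str:
        # Try at most one full rotation so an all-unhealthy pool cannot loop forever.
        for _ in range(len(self.backends)):
            candidate = next(self._ring)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends available")

lb = RoundRobinBalancer(["app-1", "app-2", "app-3"])
print([lb.next_backend() for _ in range(4)])  # ['app-1', 'app-2', 'app-3', 'app-1']
```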

Here are some key benefits of using load balancing:

- Increased Scalability: By distributing traffic across multiple servers, load balancing allows your application to handle more requests, making it more scalable and responsive to spikes in demand.

- Enhanced Performance: Load balancing can reduce server load and latency, resulting in faster response times and improved user experience.

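- High Availability: Because traffic is spread across multiple servers, load balancing can keep your application available when one server goes down, provided the load balancer runs health checks and stops routing requests to the failed server. The load balancer itself should also be redundant so that it does not become a single point of failure.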

- Improved Security: Load balancers can act as a single point of entry for incoming traffic, allowing you to implement security measures like firewalls and intrusion detection systems more effectively.

Types of Load Balancing

Load balancers can be broadly classified into two types:

- Hardware Load Balancers: These are dedicated appliances that handle the load balancing process. They are generally more expensive but offer high performance and reliability.

- Software Load Balancers: These are software solutions that run on servers and perform load balancing functions. They are more cost-effective but may have performance limitations compared to hardware load balancers.

Choosing the right load balancing approach depends on your specific needs, budget, and technical expertise.

In conclusion, load balancing is an essential technique for achieving scalability, performance, and high availability for your web applications. By distributing traffic across multiple servers, load balancing ensures that your application can handle increasing demands and provide a seamless user experience.

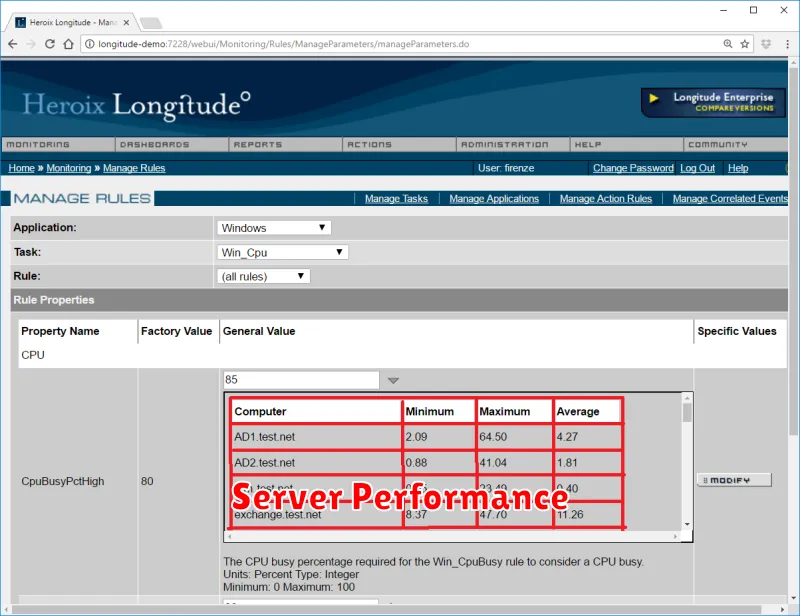

Performance Monitoring and Troubleshooting

In today’s fast-paced digital world, ensuring the smooth operation and optimal performance of applications and systems is crucial. Any disruptions or performance bottlenecks can have significant negative impacts on user experience, revenue, and brand reputation. Performance monitoring and troubleshooting play a critical role in maintaining the health and efficiency of our systems.

The Importance of Performance Monitoring

Performance monitoring involves the continuous collection and analysis of data related to various aspects of system performance, such as:

- Response times

- Resource utilization (CPU, memory, disk)

- Network bandwidth

- Error rates

- User activity

By tracking these metrics, we gain valuable insights into the overall health and performance of our systems. This data helps us identify potential issues early on, before they escalate into major problems.
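Response times are usually summarized as percentiles rather than averages, because an average can hide a slow tail that a meaningful share of users still experiences. The sketch below uses Python's `statistics.quantiles` (Python 3.8+) on a window of request data; `summarize` and the field names are hypothetical.

```python
import statistics
from typing import Dict, List

def summarize(latencies_ms: List[float], errors: int, total_requests: int) -> Dict[str, float]:
    """Summarize one window of request data the way dashboards usually present it."""
    # quantiles(n=100) returns the 99 cut points p1..p99; index k-1 is the k-th percentile.
    pct = statistics.quantiles(latencies_ms, n=100, method="inclusive")
    return {
        "p50_ms": pct[49],
        "p95_ms": pct[94],
        "p99_ms": pct[98],
        "error_rate_pct": 100 * errors / total_requests,
    }
```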

Troubleshooting Performance Issues

When performance issues arise, effective troubleshooting is essential to diagnose the root cause and implement appropriate solutions. This typically involves a systematic approach:

- Identify the problem: Analyze the performance metrics and gather information about the issue.

- Isolate the source: Determine which component or service is responsible for the performance degradation.

- Diagnose the cause: Investigate the underlying reasons for the issue, such as code bugs, hardware failures, or configuration errors.

- Implement solutions: Apply appropriate fixes, such as code changes, resource optimization, or system upgrades.

- Monitor and evaluate: Track the impact of the implemented solutions and ensure they have resolved the issue.

Best Practices for Performance Monitoring and Troubleshooting

To effectively monitor and troubleshoot performance issues, consider the following best practices:

- Establish clear performance baselines: Define expected performance levels and metrics to track deviations.

- Use comprehensive monitoring tools: Leverage tools that provide real-time insights into various system components and metrics.

- Implement alerting mechanisms: Set up alerts to notify you of critical issues and trigger immediate responses.

- Document troubleshooting steps: Maintain a record of troubleshooting efforts for future reference and knowledge sharing.

- Continuous improvement: Regularly review performance data and make adjustments to optimize system performance.
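Baselines and alerting can be combined in a simple rule: flag a metric when it rises well above its recent normal range. The sketch below uses the mean plus k standard deviations of a baseline window; `deviates_from_baseline` and the default k = 3 are illustrative assumptions. Real alerting systems often also account for daily and weekly patterns so that normal peak-hour load does not trigger alerts.

```python
import statistics
from typing import List

def deviates_from_baseline(baseline: List[float], current: float, k: float = 3.0) -> bool:
    """Flag `current` if it exceeds the baseline mean by more than k standard deviations."""
    mean = statistics.fmean(baseline)
    stdev = statistics.stdev(baseline)  # sample standard deviation; needs at least 2 points
    return current > mean + k * stdev

baseline_p95_ms = [100, 102, 98, 101, 99]  # hypothetical recent p95 latencies
print(deviates_from_baseline(baseline_p95_ms, 120))  # True: well above normal
print(deviates_from_baseline(baseline_p95_ms, 103))  # False: within normal variation
```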

Performance monitoring and troubleshooting are indispensable for ensuring the reliability and efficiency of our systems. By proactively monitoring performance, identifying issues early on, and applying effective troubleshooting techniques, we can maintain a positive user experience and achieve our business objectives.